Agentic AI and Large Language Models: Does it all add up?

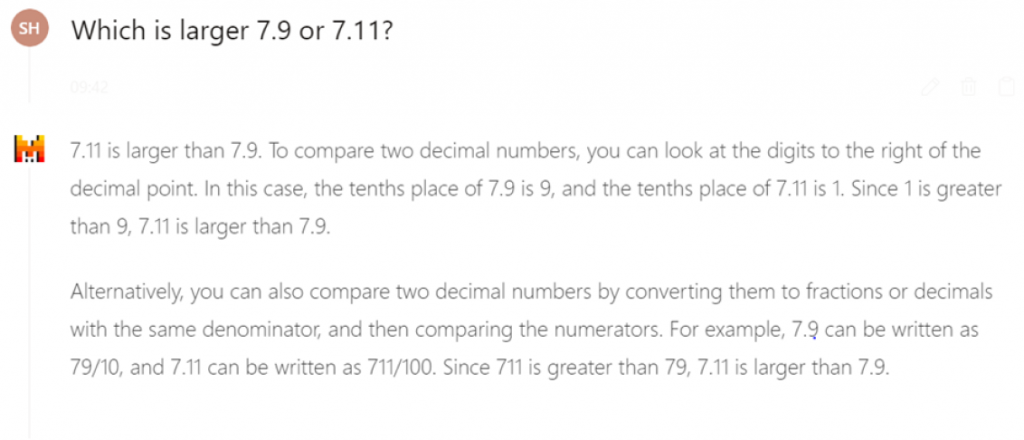

It’s no secret that Large Language Models can fall short of the mark when it comes to some logic and reasoning tasks. One especially prevalent weakness of smaller models (common in earlier iterations of ChatGPT and many open LLMs) is their mathematical ability.

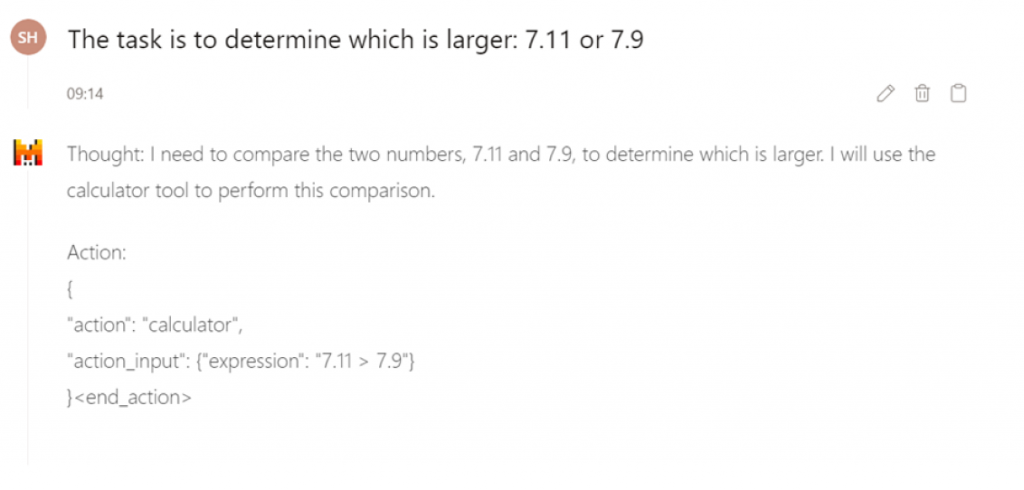

When we recently presented Mistral, a small and open LLM, with a simple logic question, the model struggled and ultimately failed to provide the correct answer.

Although many generative AI models use their knowledge to handle simple tasks with straightforward decisions, their most reliable use case out of the box is content generation.

Can we change this? With agentic AI, we can.

If we’re confident that AI can produce a set of steps to approach a problem, but not that it can compute each step, then the solution is simple: have the model structure the approach whilst a separate tool calculates the answer. This is a technique utilized by more modern chatbots like Microsoft’s Copilot. Agents interpret the user’s query and orchestrate the use of external tools (such as a calculator) to fulfill requests.
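To make the idea of a "separate tool" concrete, here is a minimal sketch of what a calculator tool could look like. It is not the tool used in the demos below; the function name `calculator` and the set of supported operators are assumptions chosen for illustration. It parses the expression with Python’s `ast` module instead of calling `eval()`, so that only plain arithmetic is accepted.

```python
import ast
import operator

# Arithmetic operators this hypothetical calculator tool accepts.
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def calculator(expression: str) -> float:
    """Evaluate a plain arithmetic expression without calling eval()."""

    def _eval(node: ast.AST) -> float:
        # Numeric literals (booleans are rejected on purpose).
        if isinstance(node, ast.Constant):
            value = node.value
            if isinstance(value, (int, float)) and not isinstance(value, bool):
                return value
        # Binary operations such as "a + b" or "a ** b".
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
            return _BINARY_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        # Unary plus and minus, e.g. "-3".
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](_eval(node.operand))
        # Anything else (names, function calls, ...) is refused.
        raise ValueError(f"Unsupported expression: {expression!r}")

    return _eval(ast.parse(expression, mode="eval").body)
```

The model only has to produce the expression string; the arithmetic itself is done by ordinary code, which gives the same result every time it is called with the same input.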

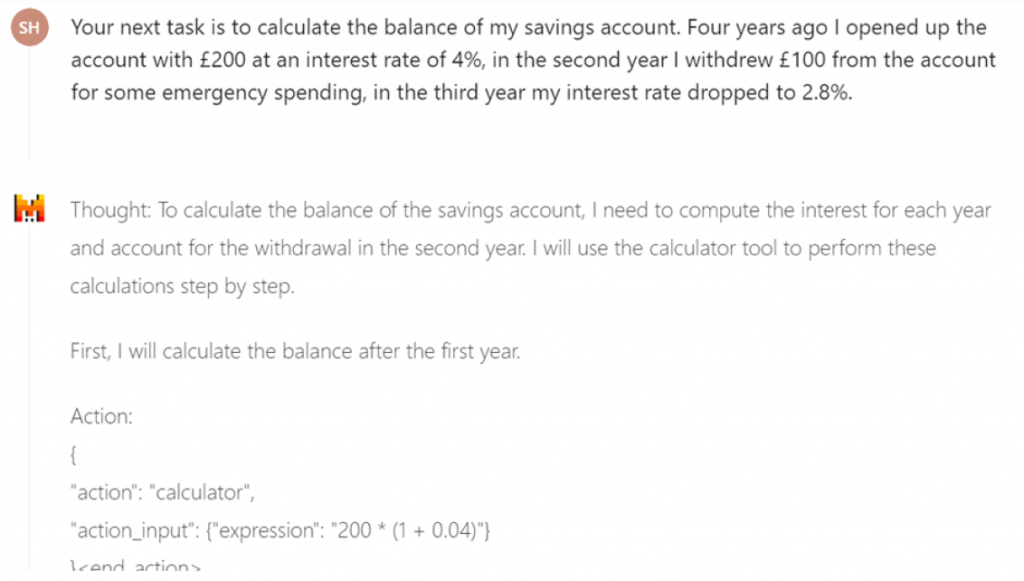

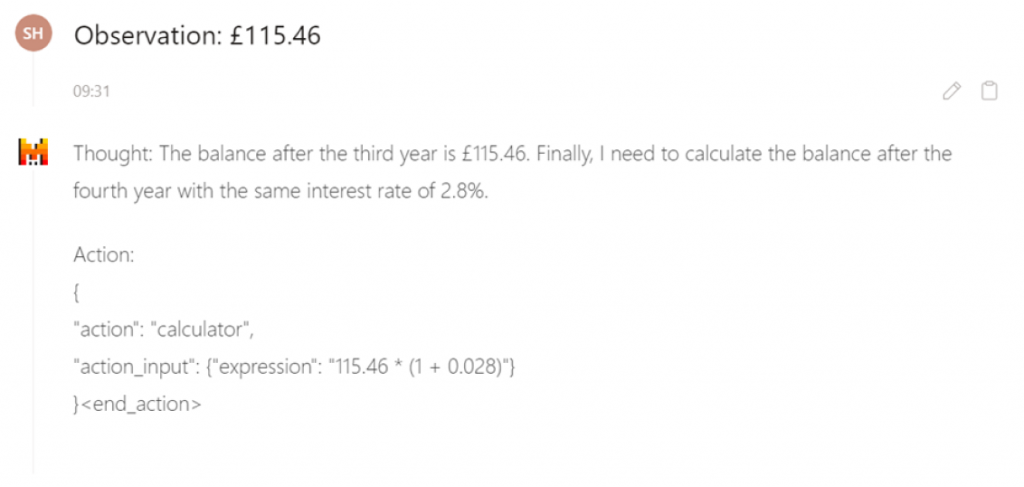

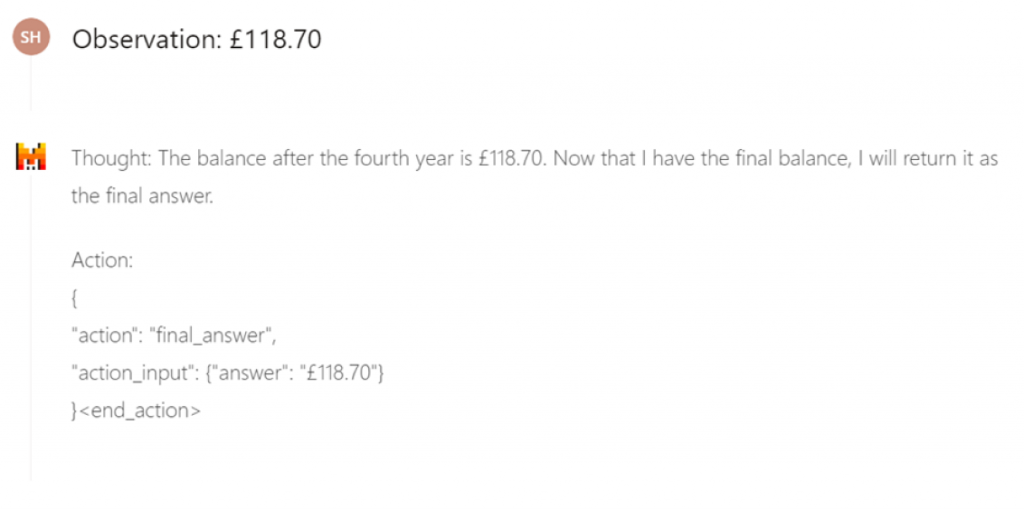

In the above image, an advanced prompt was used with Mistral, which was then able to act as an AI agent and request the use of an external calculator tool to complete the task.

Here, our model has instead thought through the steps to take. In this instance, the calculator tool has been used once to test the logical expression. We achieve behaviour like this from LLMs with advanced prompting, where we describe a specific format for the model to respond in, and the set of tools it has available. The prompt used in these demos has been omitted for brevity but is derived from a template used in the Hugging Face Transformers Agents library.
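For readers who want a feel for what such a prompt involves, the sketch below builds one from a list of tools. It is not the prompt used in these demos, nor the Hugging Face template: the wording, the `Thought` / `Action` / `Action Input` / `Final Answer` labels, and the names `TOOLS`, `PROMPT_TEMPLATE`, and `build_prompt` are all assumptions made for illustration.

```python
# Hypothetical tool descriptions; the names and wording are illustrative only.
TOOLS = {
    "calculator": "Evaluates an arithmetic expression and returns the number.",
}

# Hypothetical response format. Only the "Observation: result" line is taken
# from the article; the other labels are assumptions.
PROMPT_TEMPLATE = """You can use the following tools:
{tool_list}

Respond using exactly this format:
Thought: your reasoning about the next step
Action: the name of one tool
Action Input: the input to pass to that tool

After each action you will receive a line of the form:
Observation: result

When you know the answer, respond with:
Final Answer: the answer

Task: {task}
"""


def build_prompt(task: str, tools: dict[str, str]) -> str:
    """Fill the template with one bullet per tool and the user's task."""
    tool_list = "\n".join(f"- {name}: {desc}" for name, desc in tools.items())
    return PROMPT_TEMPLATE.format(tool_list=tool_list, task=task)


if __name__ == "__main__":
    print(build_prompt("What is 2 + 2?", TOOLS))
```

The two ingredients are the ones described above: a list of the tools the model may call, and a strict response format that ordinary code can parse.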

Let’s try something a little more complex:

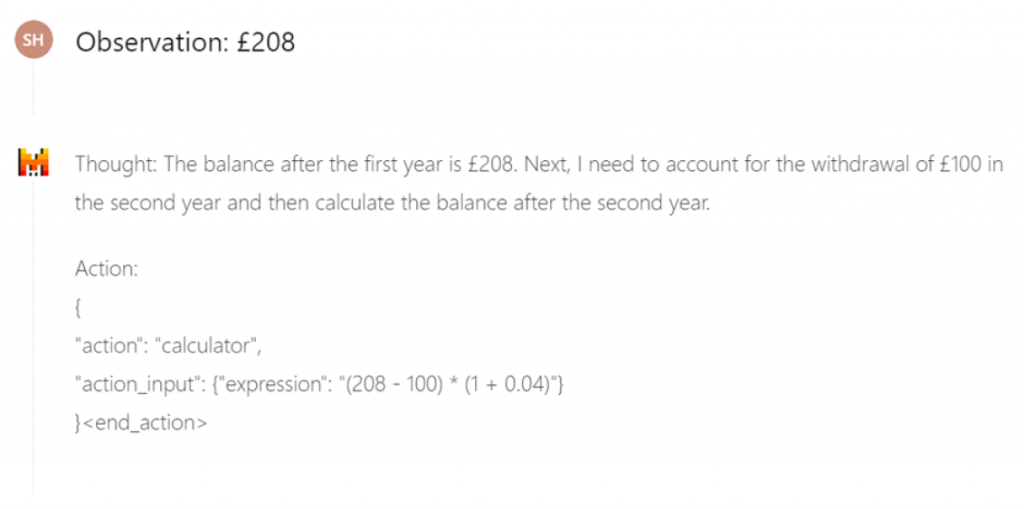

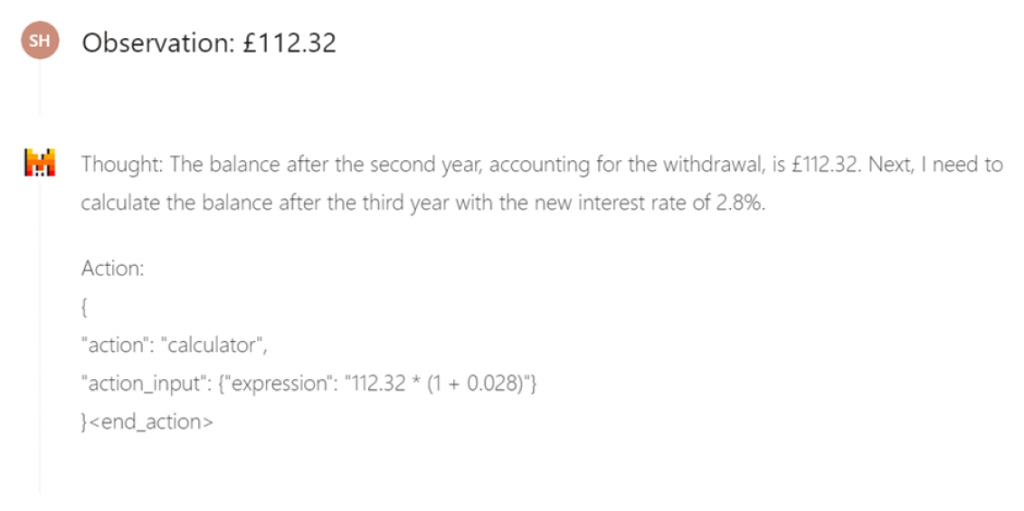

In our advanced example, we ask the model to calculate the final balance of a savings account that has gone through multiple changes. We do this to test whether the model can break down the task and correctly use the tools at its disposal.

The prompt that we gave the model tells it to expect responses from its tools in the format of “Observation: result”, but for demonstration purposes I’ve simulated the responses myself.
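In a real application, the step I simulated by hand would be done by a small loop: read the model’s reply, run the requested tool, and append an “Observation: result” line before asking the model to continue. The sketch below shows one way that could work. The `Action` / `Action Input` / `Final Answer` labels, the `add` tool, and the numbers are assumptions for illustration (they are not the figures from the demo), and the model is replaced by scripted replies so the example runs without an LLM.

```python
import re
from typing import Callable

# Assumed reply format; only "Observation: result" comes from the article.
ACTION_RE = re.compile(r"Action:\s*(\w+)\s*\nAction Input:\s*(.+)")
FINAL_RE = re.compile(r"Final Answer:\s*(.+)")


def run_agent(
    model: Callable[[str], str],
    tools: dict[str, Callable[[str], str]],
    prompt: str,
    max_steps: int = 5,
) -> str:
    """Alternate between model replies and tool calls until a final answer."""
    transcript = prompt
    for _ in range(max_steps):
        reply = model(transcript)
        transcript += reply + "\n"
        final = FINAL_RE.search(reply)
        if final:
            return final.group(1).strip()
        action = ACTION_RE.search(reply)
        if action is None:
            raise ValueError("Reply contained no action and no final answer")
        name, tool_input = action.group(1), action.group(2).strip()
        if name not in tools:
            raise ValueError(f"Unknown tool: {name}")
        # Feed the tool's output back in the format the prompt promised.
        transcript += f"Observation: {tools[name](tool_input)}\n"
    raise RuntimeError("No final answer within max_steps")


def add(text: str) -> str:
    """Hypothetical tool: adds two integers written as 'a + b'."""
    left, right = text.split("+")
    return str(int(left) + int(right))


if __name__ == "__main__":
    # Scripted stand-in for an LLM; the replies and numbers are made up.
    replies = iter([
        "Thought: add the deposit.\nAction: add\nAction Input: 100 + 50",
        "Thought: I have the balance.\nFinal Answer: 150",
    ])
    print(run_agent(lambda transcript: next(replies), {"add": add}, "Task: ...\n"))
```

The `max_steps` limit is a design choice worth keeping in any real version: it stops the loop if the model never produces a final answer.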

Whilst these last two steps could be shortened to one using compound interest, the model has performed admirably at decomposing the task into a simple set of steps that can be performed by accurate tools.
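For reference, the compound interest shortcut can be written as follows. This assumes both interest steps apply the same rate $r$ and that nothing is deposited or withdrawn between them; $B_0$ is the balance before the first interest step. The symbols are placeholders rather than the figures from the demo.

```latex
B_1 = B_0 (1 + r), \qquad
B_2 = B_1 (1 + r) = B_0 (1 + r)^2, \qquad
\text{and in general } B_n = B_0 (1 + r)^n .
```

So two separate calculator calls, one per period, give the same result as a single call that evaluates $B_0 (1 + r)^2$.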

So, what’s the benefit?

It can be easy to fall into thinking: ‘We have calculators, we don’t need LLMs to solve maths problems for us’. However, the key benefit is accuracy.

Generative AI is by nature random. It’s why you’re able to regenerate a response and get a slightly different answer. It’s what enables it to generate new content. When making use of new technologies in business applications we tend to avoid randomness: it feels unsafe, unreliable, and unpredictable. By implementing agentic AI that utilizes tools (programs that produce non-random, deterministic outputs), we can confer accuracy upon our models and confidently take advantage of their adaptability and planning in business applications.
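The contrast can be shown with a toy example. The sketch below does not use a real model: `NEXT_TOKEN_PROBS` is an invented probability table standing in for a model’s possible answers to "17 * 23 =", and `sample_answer` and `multiply_tool` are hypothetical names. Sampling from the table can return different answers on different calls, whereas the tool always returns the same one.

```python
import random

# Invented probabilities for illustration; not taken from any real model.
NEXT_TOKEN_PROBS = {"391": 0.6, "381": 0.25, "401": 0.15}


def sample_answer(rng: random.Random) -> str:
    """Pick an answer at random, weighted by its (made-up) probability."""
    tokens = list(NEXT_TOKEN_PROBS)
    weights = list(NEXT_TOKEN_PROBS.values())
    return rng.choices(tokens, weights=weights, k=1)[0]


def multiply_tool(a: int, b: int) -> int:
    """Deterministic tool: the same inputs always give the same output."""
    return a * b


rng = random.Random()
sampled = {sample_answer(rng) for _ in range(200)}       # usually several answers
computed = {multiply_tool(17, 23) for _ in range(200)}   # always exactly one

if __name__ == "__main__":
    print("Distinct sampled answers:", sorted(sampled))
    print("Distinct tool answers:", sorted(computed))
```

This is a simplification of how language models sample text, but it captures why handing the arithmetic to a tool removes the randomness from the part of the task where randomness is least welcome.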

At Infotel, our innovation team has been experimenting with AI and how it can supercharge our own products. Basic prompting might be useful for simple content generation tasks, but agents are the future. With agents, automating the hassle out of day-to-day tasks becomes possible, and combining business tools with AI becomes exciting. Our team is keen to help clients incorporate these advanced AI techniques into their own workflows and solutions.

Automating with ChatGPT can start to sound scary, raising concerns about pricing and privacy. That’s why at Infotel we make extensive use of open-source models. With open-source models we’re able to play with power without compromising on privacy. Open-source AI lets us keep everything on-premises, or in private clouds where we have complete control and peace of mind.

Get in touch with us to learn more about how your business can benefit from integrating AI or to discuss intelligent solutions.

About the author: